The 'KPI Progress' project was a multifaceted and rewarding part of my internship. I worked as both a data engineer and a data analyst, analyzing Key Performance Indicator (KPI) data for all employees from 2022 to 2023. The project spanned data cleansing, ETL (extract, transform, load) pipelines, and an interactive dashboard for assessing each employee's KPI progress.

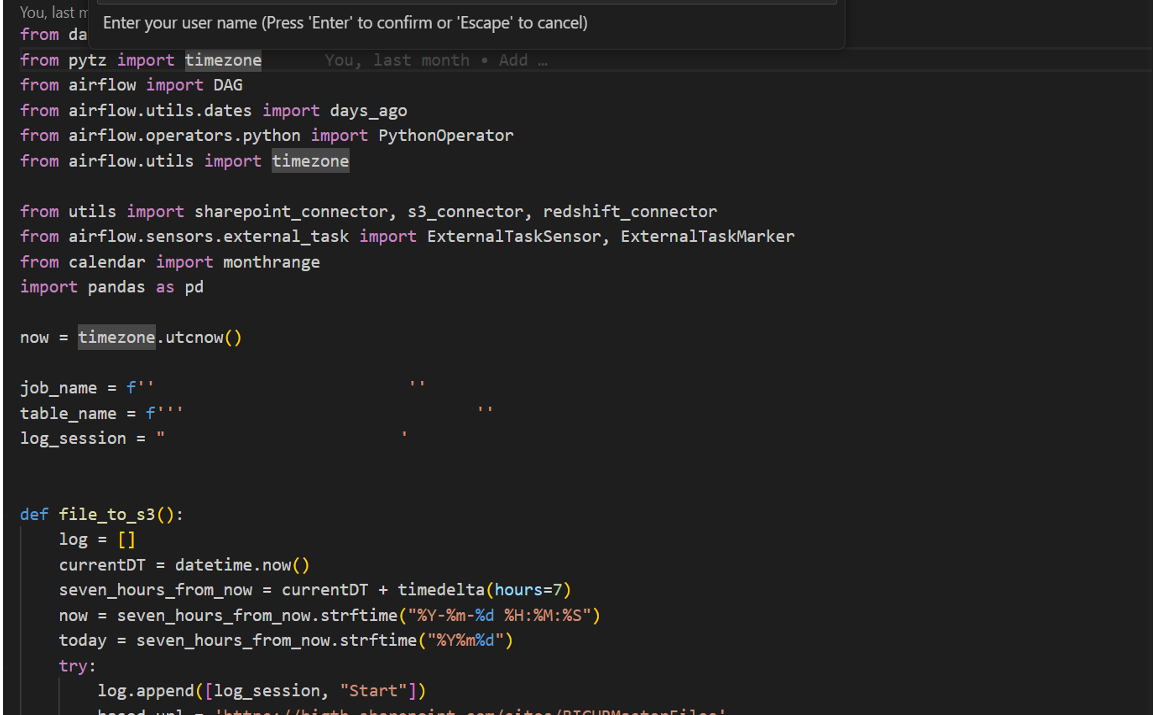

As a data engineer, my first task was data cleansing, to make sure the data could be trusted. Using Python in Jupyter Notebook, I removed inconsistencies, duplicates, and missing values from the KPI dataset, which gave the later analysis a solid foundation. Next, I built upload processes in Python and SQL to transfer the cleansed data to AWS S3, and then used AWS Redshift to store and manage the dataset.
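The cleansing and loading steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the project's actual notebook: the column names (`employee_id`, `kpi_name`, `period`, `actual`, `target`), the helper names `clean_kpi_rows` and `build_copy_sql`, and the table, bucket, and IAM role in the demo are all hypothetical. The S3 upload itself (for example with boto3) is omitted; the sketch only builds the Redshift `COPY` statement that would load an uploaded CSV file.

```python
from __future__ import annotations

import math
from typing import Iterable

# Hypothetical schema: the original dataset's column names are not given.
REQUIRED = ("employee_id", "kpi_name", "period", "actual", "target")


def clean_kpi_rows(rows: Iterable[dict]) -> list[dict]:
    """Drop incomplete, non-numeric, and duplicate rows; normalize text fields."""
    seen: set[tuple[str, str, str]] = set()
    cleaned: list[dict] = []
    for row in rows:
        # Missing values: skip rows where a required field is absent or blank.
        if any(row.get(k) is None or str(row[k]).strip() == "" for k in REQUIRED):
            continue
        try:
            actual = float(row["actual"])
            target = float(row["target"])
        except (TypeError, ValueError):
            continue  # Non-numeric measurements cannot be analyzed.
        if math.isnan(actual) or math.isnan(target):
            continue  # NaN is another form of missing value.
        # Inconsistencies: trim whitespace and unify casing of identifiers.
        employee_id = str(row["employee_id"]).strip().upper()
        kpi_name = str(row["kpi_name"]).strip().lower()
        period = str(row["period"]).strip()
        # Duplicates: keep the first record per (employee, KPI, period).
        key = (employee_id, kpi_name, period)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"employee_id": employee_id, "kpi_name": kpi_name,
                        "period": period, "actual": actual, "target": target})
    return cleaned


def build_copy_sql(table: str, s3_uri: str, iam_role_arn: str) -> str:
    """Build a Redshift COPY statement for a CSV file with one header row.

    Arguments are assumed to be trusted configuration, not user input.
    """
    return (
        f"COPY {table} FROM '{s3_uri}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "FORMAT AS CSV IGNOREHEADER 1;"
    )


if __name__ == "__main__":
    raw = [
        {"employee_id": " e01 ", "kpi_name": "Sales", "period": "2023-01", "actual": "80", "target": "100"},
        {"employee_id": "E01", "kpi_name": "sales ", "period": "2023-01", "actual": "80", "target": "100"},
        {"employee_id": "E02", "kpi_name": "sales", "period": "2023-01", "actual": "", "target": "100"},
    ]
    print(clean_kpi_rows(raw))
    # → [{'employee_id': 'E01', 'kpi_name': 'sales', 'period': '2023-01', 'actual': 80.0, 'target': 100.0}]
    print(build_copy_sql("kpi.employee_kpi", "s3://example-bucket/kpi/clean.csv",
                         "arn:aws:iam::123456789012:role/ExampleRedshiftRole"))
```

Deduplication runs after normalization on purpose: `" e01 "`/`"Sales"` and `"E01"`/`"sales "` only collide once whitespace and casing are unified.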

To automate processing and refresh the dataset regularly, I used Apache Airflow, an open-source platform for orchestrating complex workflows. I built data pipelines and scheduled them to refresh the KPI data during the first ten days of each month. This kept the dashboard current after every scheduled refresh, so evaluators always monitored KPI progress against the latest available figures.
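Airflow models a pipeline as a DAG (directed acyclic graph) of tasks and accepts a cron string as a DAG's schedule. The standard-library sketch below mirrors those two ideas so they can be checked without Airflow installed; it is not an Airflow DAG file. The task names are hypothetical, and reading "the first ten days of each month" as one run per day on days 1 to 10 (cron `0 0 1-10 * *`) is an assumption.

```python
from __future__ import annotations

import calendar
from datetime import date
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical task graph: each task maps to the set of tasks it depends on.
PIPELINE: dict[str, set[str]] = {
    "extract_kpi": set(),
    "clean_kpi": {"extract_kpi"},
    "upload_to_s3": {"clean_kpi"},
    "copy_to_redshift": {"upload_to_s3"},
}

# Cron string an Airflow DAG could use as its schedule:
# minute 0, hour 0, on days 1-10 of every month.
REFRESH_CRON = "0 0 1-10 * *"


def run_order(graph: dict[str, set[str]]) -> list[str]:
    """Order tasks so that each one runs after all of its upstream tasks."""
    return list(TopologicalSorter(graph).static_order())


def refresh_dates(year: int, month: int, window: int = 10) -> list[date]:
    """Dates in the given month that fall inside the refresh window."""
    days_in_month = calendar.monthrange(year, month)[1]
    return [date(year, month, d) for d in range(1, min(window, days_in_month) + 1)]


if __name__ == "__main__":
    print(run_order(PIPELINE))
    # → ['extract_kpi', 'clean_kpi', 'upload_to_s3', 'copy_to_redshift']
    print(refresh_dates(2023, 2)[-1])
    # → 2023-02-10
```

Keeping dependencies explicit means a failed cleaning task would stop the S3 upload and Redshift load from running on bad data, which is the main reason to orchestrate the steps rather than chain scripts by hand.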

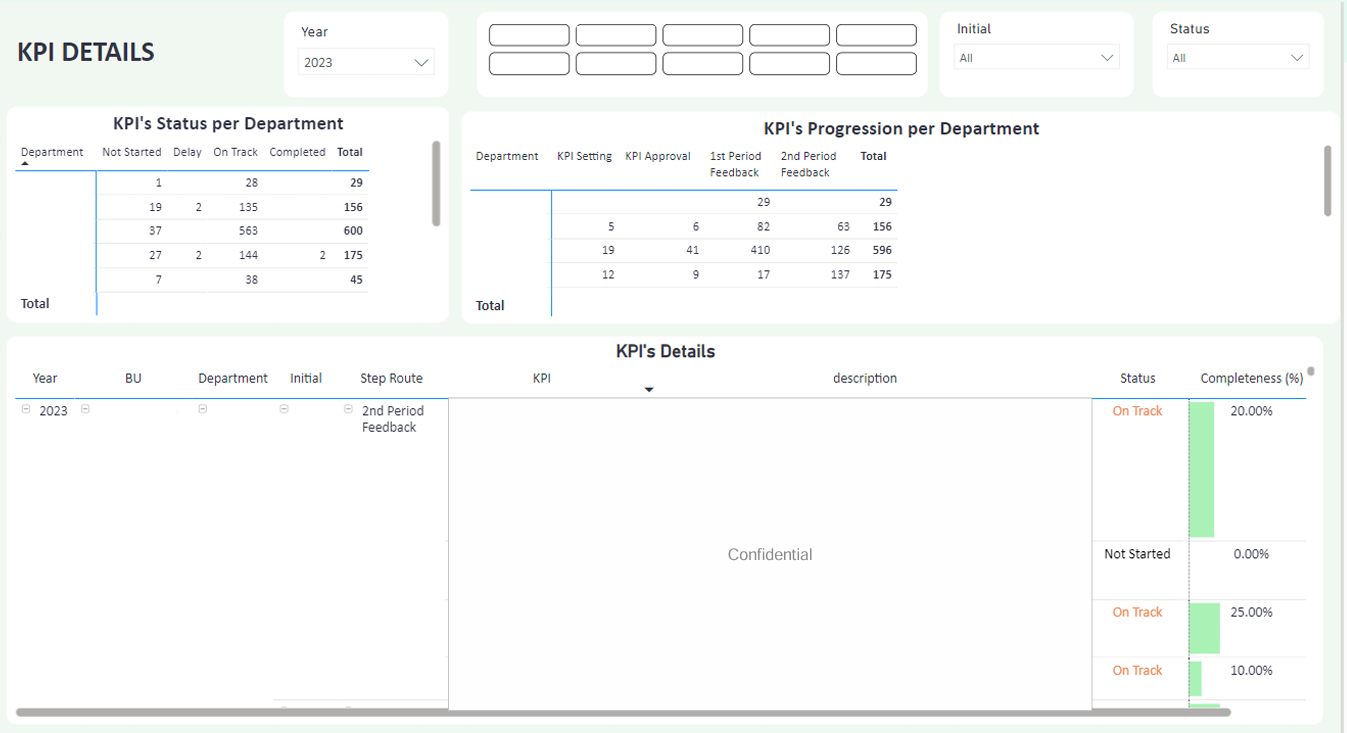

With the data engineering work complete, I moved into the data analyst role. Using DAX in Power BI, I designed and built an interactive dashboard that allowed each evaluator to track KPI progress.
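The dashboard's DAX measures are not shown here, so the following Python function is only a hypothetical stand-in for one common way to express KPI progress: the ratio of actual to target for each KPI, averaged per employee. The field names and the averaging choice are assumptions. Zero targets are skipped, loosely mirroring how DAX's `DIVIDE` returns BLANK rather than an error when the denominator is zero.

```python
from __future__ import annotations

from collections import defaultdict


def kpi_progress(records: list[dict]) -> dict[str, float]:
    """Mean achievement ratio (actual / target) per employee.

    Records with a zero target are skipped instead of raising
    ZeroDivisionError.
    """
    ratios: dict[str, list[float]] = defaultdict(list)
    for r in records:
        if r["target"] == 0:
            continue
        ratios[r["employee_id"]].append(r["actual"] / r["target"])
    return {emp: sum(vals) / len(vals) for emp, vals in ratios.items()}


if __name__ == "__main__":
    records = [
        {"employee_id": "E01", "kpi_name": "sales", "actual": 75.0, "target": 100.0},
        {"employee_id": "E01", "kpi_name": "nps", "actual": 25.0, "target": 50.0},
        {"employee_id": "E02", "kpi_name": "sales", "actual": 120.0, "target": 100.0},
        {"employee_id": "E02", "kpi_name": "tickets", "actual": 5.0, "target": 0.0},
    ]
    for emp, progress in kpi_progress(records).items():
        print(f"{emp}: {progress:.1%}")
    # → E01: 62.5%
    # → E02: 120.0%
```

Averaging per-KPI ratios weights every KPI equally; dividing summed actuals by summed targets would instead let large-scale KPIs dominate, so the choice should match how evaluators are meant to read the score.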